As technologies like facial recognition and smart home devices become more common in daily life, many people have begun to question the morality of these inventions, the ethics of their use, and how our own biases and worldviews shape the development of these smart tools.

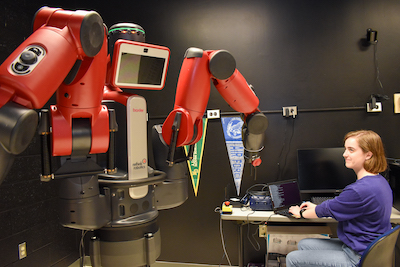

George Mason University researcher Elizabeth “Beth” Phillips is working with collaborators from labs around the country to answer these pressing questions about artificial intelligence and robotics in her role as the principal investigator for the university’s Applied Psychology and Autonomous System Lab (ALPHAS).

The ALPHAS Lab studies how to make robots, computer agents, and artificial intelligence (AI) into better teammates, partners, and companions. Computer science may seem like the obvious field for this work, but the social sciences and humanities are critical to developing effective, ethical, and efficient machines that humans can work with and alongside over the long term.

Student and faculty researchers in ALPHAS are exploring, for example, the benefits of using social robotic animals as therapeutic tools, how robots might conceptualize social norms to better fit into our lives, and how robots might define and justify the morality of their actions.

“You have to know a lot about humans in order to create successful robotics,” said Phillips, an assistant professor in human factors and applied cognition in the Department of Psychology. “How humans interact, how they work together, how they build trust in each other: these are the same building blocks in understanding how humans might interact with machines and can help us better establish how people might build trust in a variety of autonomous machines.”

One of the lab’s projects, “Improving Human-Machine Collaborations with Heterogeneous Multiagent Systems,” received a 2022 award from the Seed Funding Initiative of Mason’s Office of Research, Innovation, and Economic Impact, in support of the Institute for Digital Innovation (IDIA). The project will be conducted with co-investigators Ewart de Visser and Lt. Col. Chad Tossell of the Air Force Academy, along with Air Force Academy cadets slated to commission as officers in the U.S. Space Force.

The humanities also provide perspective on ethics and morality in relation to robotics: what could it mean for a robot to be a moral agent? “The rules of moral philosophy vary based on culture and structure,” Phillips said. “When we think about robots as moral agents, what we’re really talking about is being able to translate a system of morality and ethics into an algorithm. It’s up to the humanities and social sciences to define these systems, as well as consider the implications of favoring one moral and ethical system over another.”
